Software-defined Storage = Virtualized Storage = vSAN?

Recently I have been getting into more and more discussions around Software-defined Storage and storage virtualization. Are they the same, partly the same, or something totally different? In this blog post I’ll try to shed some light on today’s technologies behind these buzzwords and make some sense of them at the same time.

What we used to call virtualizing storage

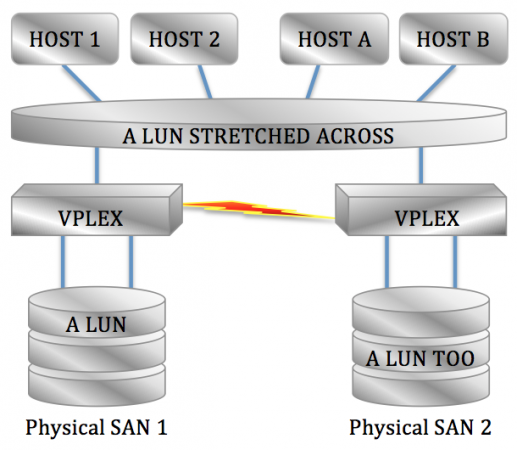

Before we launched the idea of the Software-defined Datacenter (SDDC) and Software-defined Storage (SDS), we were already putting hardware between storage and hosts, creating an abstraction layer between the two. Good examples of these technologies are IBM’s SVC and EMC’s VPLEX.

A good example of abstracting storage from the underlying hardware – VPLEX in a Metro configuration abstracts the underlying storage to add the value of stretching a LUN across physical locations

These technologies look south for their storage requirements, abstract this storage and present the abstracted storage northbound to the hosts. In my opinion this is an abstraction layer, but a lot of people define it as a virtualization layer. That kind of makes sense: just like with our well-known and trusted vSphere, we use software to separate the physical storage from the hosts using it.
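To make the south/north picture concrete, here is a minimal Python sketch of such an abstraction layer. It assumes a hypothetical `BackendArray` per site and a hypothetical `StretchedVolume` that mirrors every write synchronously to both arrays, loosely in the spirit of a LUN stretched across locations. It is a toy (no caching, locking or failure handling), not how VPLEX is actually implemented.

```python
class BackendArray:
    """Hypothetical physical array at one site; stores blocks by LBA."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}

    def write(self, lba, data):
        self.blocks[lba] = data

    def read(self, lba):
        return self.blocks.get(lba)


class StretchedVolume:
    """Virtual LUN presented northbound to hosts.

    Southbound, every write is mirrored synchronously to all backend arrays,
    so hosts at either site can read the same data from their local array.
    """

    def __init__(self, backends):
        self.backends = backends

    def write(self, lba, data):
        for array in self.backends:
            array.write(lba, data)

    def read(self, lba, site=0):
        return self.backends[site].read(lba)


# Usage: one LUN, two arrays in two locations.
site_a = BackendArray("array-site-a")
site_b = BackendArray("array-site-b")
lun = StretchedVolume([site_a, site_b])
lun.write(0, b"hello")
print(lun.read(0, site=1))  # b'hello'
```

The hosts only ever see `StretchedVolume`; which arrays sit underneath is hidden by the layer, which is exactly the abstraction described above.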

Nowadays we see other technologies emerging that no longer have dedicated storage hardware underneath, but generic local disks in generic nodes. Here the software does not just abstract the storage; it contains all the intelligence normally found in SANs and NASes. We could call this a virtual SAN.

The virtual SAN

Building on the “original” virtualized storage, we can take virtualization further: instead of only inserting an abstraction layer between SAN and hosts, we can build an entire SAN in software.

An example of a virtual SAN – note there is no physical array, just generic disks and an x86 hardware layer that runs the virtual SAN

The result is a bunch of generic servers with local disks, where the software running on these servers does not “simply” pass this storage up to the hosts, but implements a full-blown SAN in software. Examples of this technology are VMware’s vSAN and EMC’s ScaleIO.
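As a rough illustration of where the intelligence moves, this sketch pools the local disks of a few generic nodes and keeps two copies of every block on different nodes, so a read survives the loss of one node. The hash-based placement, the `copies` parameter and all class names are simplifying assumptions; vSAN and ScaleIO each use their own, far more elaborate placement and rebuild logic.

```python
import hashlib


class Node:
    """Hypothetical generic x86 server contributing its local disk to the pool."""

    def __init__(self, name, capacity_blocks):
        self.name = name
        self.capacity_blocks = capacity_blocks
        self.disk = {}  # (volume, lba) -> data stored on this node's local disk


class VirtualSAN:
    """Toy virtual SAN: pools the nodes' local disks and keeps `copies`
    replicas of every block on distinct nodes."""

    def __init__(self, nodes, copies=2):
        if copies > len(nodes):
            raise ValueError("need at least as many nodes as copies")
        self.nodes = nodes
        self.copies = copies

    def usable_capacity(self):
        # Every block is stored `copies` times, so usable space is raw / copies.
        return sum(n.capacity_blocks for n in self.nodes) // self.copies

    def _placement(self, volume, lba):
        # Hash the block address to pick a starting node, then take the next
        # nodes in order so that no two replicas land on the same node.
        key = f"{volume}:{lba}".encode()
        start = int(hashlib.sha256(key).hexdigest(), 16) % len(self.nodes)
        return [self.nodes[(start + i) % len(self.nodes)] for i in range(self.copies)]

    def write(self, volume, lba, data):
        for node in self._placement(volume, lba):
            node.disk[(volume, lba)] = data

    def read(self, volume, lba, failed=()):
        # Serve the read from the first replica whose node is still up.
        for node in self._placement(volume, lba):
            if node.name not in failed:
                return node.disk.get((volume, lba))
        raise OSError("all replicas are unavailable")


# Usage: four generic nodes, each with a local disk of 1000 blocks.
nodes = [Node(f"node{i}", capacity_blocks=1000) for i in range(4)]
vsan = VirtualSAN(nodes, copies=2)
print(vsan.usable_capacity())  # 2000
vsan.write("vol1", 42, b"data")
holders = [n.name for n in nodes if ("vol1", 42) in n.disk]
print(vsan.read("vol1", 42, failed={holders[0]}))  # b'data'
```

There is no array anywhere in this picture: capacity, redundancy and failure handling all live in the software running on the nodes.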

Software-defined Storage

Then there was Software-defined Storage. Is this the next step after the virtual SAN? Well actually, not necessarily… Yes, you can use virtual SANs in SDS, but it is by no means a requirement. As the name states, we’re talking about “Software-defined”: defined by software, not necessarily built from software. It is simply a programmatic way of getting storage up to the hosts. That can be a full software stack, but it can also be the dedicated storage hardware we know today (SAN, NAS), programmatically carved up and served to the host(s).
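A minimal sketch of the “defined by software, not necessarily built from software” idea: one provisioning call, and behind it either a physical array or a virtual SAN. The class names, feature sets and first-fit selection policy are hypothetical illustrations, not any vendor’s API.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Volume:
    name: str
    size_gb: int
    backend: str


class StorageBackend(ABC):
    """Anything that can be carved up programmatically (hypothetical)."""

    name = "backend"

    def __init__(self, capacity_gb):
        self.free_gb = capacity_gb

    @abstractmethod
    def features(self) -> set:
        """Data services this backend offers."""

    def create_volume(self, name, size_gb):
        if size_gb > self.free_gb:
            raise ValueError(f"{self.name}: not enough free capacity")
        self.free_gb -= size_gb
        return Volume(name, size_gb, self.name)


class PhysicalArray(StorageBackend):
    """Dedicated SAN/NAS hardware, driven through software."""
    name = "physical-array"

    def features(self):
        return {"replication", "snapshots"}


class VirtualSanBackend(StorageBackend):
    """Storage built entirely in software on generic nodes."""
    name = "virtual-san"

    def features(self):
        return {"snapshots"}


def provision(backends, name, size_gb, required=frozenset()):
    """Pick the first backend that offers every required feature and has
    room, then carve out the volume there."""
    for backend in backends:
        if set(required) <= backend.features() and backend.free_gb >= size_gb:
            return backend.create_volume(name, size_gb)
    raise LookupError(f"no backend can satisfy {name}")


# Usage: the caller asks for capabilities, not for a specific box.
pool = [VirtualSanBackend(500), PhysicalArray(2000)]
print(provision(pool, "web01", 100).backend)                  # virtual-san
print(provision(pool, "db01", 200, {"replication"}).backend)  # physical-array
```

The point of the design is that `provision` neither knows nor cares whether the capacity comes from dedicated hardware or from software on generic disks; both are simply programmable backends.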

So Software-defined Storage actually has little or nothing to do with virtualized storage, yet the two are heavily intertwined. That’s because as we develop full-software SANs and NASes, we naturally build in the ability to control them programmatically, which makes them software-defined.

SDS – note how it works programmatically with the physical SAN infrastructure, and that there is still storage underneath (this can be a physical SAN, a virtual SAN or a bit of both)

Is a full software SAN with SDS capabilities the solution everyone should aim for? No way. Will it prove to be extremely valuable for a lot of use cases? You bet! It all comes down to the right tool for the right job. In some cases a virtual SAN may never become efficient; in others it may become very efficient very soon. I’ll dive into this in the next few posts.

Managing the Control Plane

Along with Software-defined Storage came the concepts of the Control plane and the Data plane. The Control plane is tooling that sits on the sideline, looking at storage and hosts. It programs storage arrays and storage networks to present storage to the hosts. The prime example of this technology is EMC’s ViPR (also see Software-defined Storage: Fairy tale or Reality?).

Notice something? In this case the software delivers programmatic control over the storage layer, but does NOT sit in the data path. It is more of an orchestration tool: all data paths remain direct, with no software layer in between.
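The difference is easiest to see in code. In this hypothetical sketch, `ControlPlane` only creates a LUN and programs masking on an `Array`; the host then reads and writes the array directly. The names and the simple masking model are assumptions for illustration, not ViPR’s API.

```python
class Array:
    """Hypothetical physical array with simple LUN masking."""

    def __init__(self):
        self.luns = {}     # lun_id -> {lba: data}
        self.masking = {}  # lun_id -> set of host names allowed to do I/O

    def _check(self, host, lun_id):
        if host not in self.masking.get(lun_id, set()):
            raise PermissionError(f"{host} is not masked to LUN {lun_id}")

    def write(self, host, lun_id, lba, data):
        self._check(host, lun_id)
        self.luns[lun_id][lba] = data

    def read(self, host, lun_id, lba):
        self._check(host, lun_id)
        return self.luns[lun_id].get(lba)


class ControlPlane:
    """Orchestrator: creates a LUN and programs masking, then steps aside.
    It has no read/write methods, so data can never flow through it."""

    def __init__(self, array):
        self.array = array
        self._next_id = 0

    def provision(self, host):
        lun_id = self._next_id
        self._next_id += 1
        self.array.luns[lun_id] = {}
        self.array.masking[lun_id] = {host}
        return lun_id  # the host now does I/O against the array directly


# Usage
array = Array()
cp = ControlPlane(array)
lun = cp.provision("host-a")               # control path: via the orchestrator
array.write("host-a", lun, 0, b"payload")  # data path: host straight to array
print(array.read("host-a", lun, 0))        # b'payload'
```

If the orchestrator disappeared after `provision`, I/O would keep flowing, which is exactly what “does NOT sit in the data path” means.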

Mingling in the data path: The Data Plane

Besides sitting on the sideline telling the storage what to do (the Control plane), you can also decide to mingle in the data path. This is what we call the Data plane. EMC’s ViPR, for example, delivers “object storage on file” as part of its Data plane services. In this case you DO sit in the data path, and modify/virtualize/enhance the data as it passes.

Software-defined Storage featuring both the Control AND the Data plane. Note how the Data plane sits in the data path, enriching data as it passes.

It is very important to understand the difference: the Control plane programs, but does not mingle in the data path; the Data plane does. Using the Data plane brings us a little closer to the mix of virtual SAN and SDS. Ultimately, that mix will deliver a storage soup in our favorite flavor!
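By contrast with a Control plane, a Data plane service handles every byte. The sketch below is a toy “object storage on file” layer, assuming a local directory stands in for the file storage and a SHA-256 checksum stands in for the “enrichment”. It illustrates what sitting in the data path means and is not how ViPR implements its object services.

```python
import hashlib
import json
import os
import tempfile


class ObjectOnFile:
    """Toy data-plane service: offers a put/get object interface but stores
    every object as a plain file on an underlying file system. Unlike a
    control plane, every byte of every object passes through this code."""

    def __init__(self, root):
        self.root = root
        os.makedirs(root, exist_ok=True)

    def _path(self, bucket, key):
        # Hash bucket/key so arbitrary object names map to safe file names.
        digest = hashlib.sha256(f"{bucket}/{key}".encode()).hexdigest()
        return os.path.join(self.root, digest)

    def put(self, bucket, key, data: bytes):
        path = self._path(bucket, key)
        with open(path, "wb") as f:
            f.write(data)
        # Enrich the data on the way in: record size and checksum as metadata.
        meta = {"bucket": bucket, "key": key, "size": len(data),
                "sha256": hashlib.sha256(data).hexdigest()}
        with open(path + ".meta", "w") as f:
            json.dump(meta, f)
        return meta

    def get(self, bucket, key):
        path = self._path(bucket, key)
        with open(path, "rb") as f:
            data = f.read()
        with open(path + ".meta") as f:
            meta = json.load(f)
        # Verify integrity on the way out, again inside the data path.
        if hashlib.sha256(data).hexdigest() != meta["sha256"]:
            raise OSError(f"checksum mismatch for {bucket}/{key}")
        return data, meta


# Usage: the "file" side is just a temporary directory here.
with tempfile.TemporaryDirectory() as root:
    store = ObjectOnFile(root)
    store.put("photos", "2013/vmworld.jpg", b"not really a jpeg")
    data, meta = store.get("photos", "2013/vmworld.jpg")
    print(data, meta["size"])  # b'not really a jpeg' 17
```

Because the service sees the data itself, it can add value (here, metadata and integrity checks) that a pure control plane never could.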

So what if we extended this to Software-defined Networking?

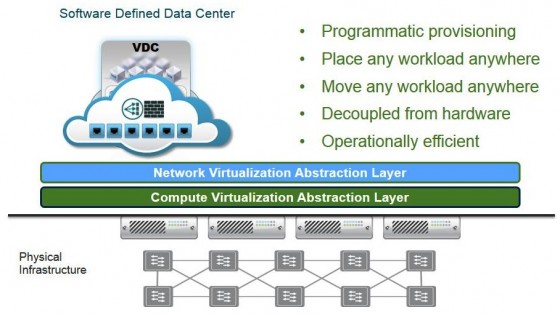

In many respects, Software-defined Networking is very much like Software-defined Storage: networking too uses dedicated devices that connect to multiple hosts. VMware showed a HUGE amount of NSX material at VMworld 2013… So where does this sit in our picture of virtualization, control plane and data plane?

From what I’ve seen so far, NSX mainly delivers virtual switches, virtual routers, virtual firewalls, etc. that can be controlled programmatically. Programmatic control means it *is* Software-defined Networking. All devices are delivered as virtual devices, which means the networking components themselves are virtualized and require just plain L2/L3 connectivity underneath. This looks a lot like virtualized storage in the virtual SAN sense, where all the tricks are in software and there are just generic disks underneath.

An image I “leased” from VMware. It clearly states that everything is not just programmed but also BUILT in software. There does not seem to be any connection to the physical networking devices – which makes NSX a pure virtualized network (one that can be under API control)
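How can a fully virtual network get by with “just plain L2/L3 connectivity underneath”? One common mechanism is overlay encapsulation: the VM’s Ethernet frame is wrapped in an ordinary UDP/IP packet between hypervisors. The sketch below uses the VXLAN header layout from RFC 7348 purely as an illustrative example; it makes no claim about which encapsulation or code a particular NSX release uses.

```python
import struct


def vxlan_encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix a VM's Ethernet frame with the 8-byte VXLAN header.

    The result is carried as the payload of an ordinary UDP/IP packet between
    hypervisors, so the physical network only needs plain L2/L3 forwarding.
    """
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # 'I' flag: the VNI field is valid
    # Header layout: flags (8 bits) | reserved (24) | VNI (24) | reserved (8)
    header = struct.pack("!II", flags << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(packet: bytes):
    """Return (vni, inner_frame) from a VXLAN payload."""
    word1, word2 = struct.unpack("!II", packet[:8])
    if not (word1 >> 24) & 0x08:
        raise ValueError("VNI flag not set")
    return word2 >> 8, packet[8:]


# Usage: virtual segments are told apart only by their VNI.
frame = b"\x00" * 14 + b"hello"  # dummy 14-byte Ethernet header + payload
payload = vxlan_encapsulate(frame, vni=5001)
print(payload[:8].hex())  # 0800000000138900
```

The physical switches and routers only ever see UDP/IP between hypervisors; the virtual segment (the VNI) exists purely in software, much like the generic disks under a virtual SAN.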

But is that the right direction? Just as it is not the right direction for storage in all use cases, I suspect it will not be the right direction for networking in all use cases. As with storage, there are reasons why you’d want to stick to more or less dedicated hardware… Here’s hoping VMware NSX will also extend its control plane to program dedicated hardware. Judging by the number of vendors partnering up, that may well be the case.

Best of all worlds

Looking at storage developments like VPLEX, ViPR, vSAN and ScaleIO, I cannot wait to see how quickly things evolve. The combination is surely a powerful one. By enabling both the Control and the Data plane to work with both dedicated hardware and generic disks, we can build solutions that are a snug fit for a very wide range of storage use cases.
